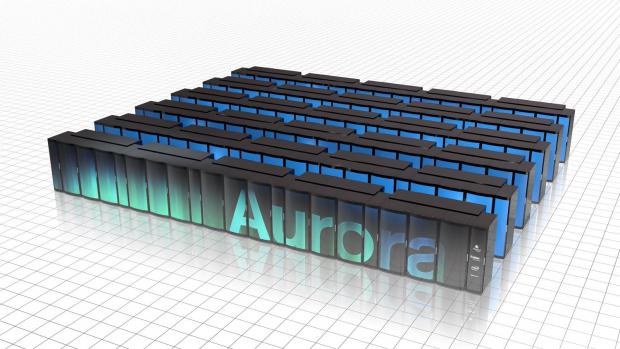

Argonne National Lab Polaris Pre-Exascale Supercomputer

The U.S. Department of Energy's Argonne National Laboratory and Hewlett Packard Enterprise (NYSE: HPE) unveiled Polaris, a new testbed supercomputer that will prepare critical workloads for future exascale systems. The testbed will deliver up to four times the performance of Argonne's current supercomputers.

Polaris will enable scientists and developers to test and optimize software codes and applications to tackle a range of artificial intelligence (AI), engineering and scientific projects planned for the forthcoming exascale supercomputer, Aurora, a joint collaboration between Argonne, Intel and HPE.

The $500+ million exaflop Aurora was planned for 2021 but has been delayed until 2022-2023 while it waits for Intel's Sapphire Rapids server chips. The original plan called for a 180 petaflop Aurora in 2018, but delays in earlier Intel chips forced a new plan.

Polaris will deliver approximately 44 petaflops of peak double-precision performance and nearly 1.4 exaflops of theoretical AI performance, which is based on mixed-precision compute capabilities. The 1.4 AI exaflops figure is not measured in FP64 (64-bit floating point), the precision used for standard supercomputer performance metrics, so it cannot be compared directly with conventional exaflop ratings.
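
To put the two quoted Polaris numbers on the same scale, here is a minimal Python sketch. The function name and constants are illustrative, and the inputs are the approximate figures quoted above ("approximately 44 petaflops" and "nearly 1.4 exaflops"), so the result is a rough ratio and not a benchmark.

```python
# Unit sizes in floating-point operations per second.
PETA = 1e15
EXA = 1e18


def ai_to_fp64_ratio(fp64_petaflops: float, ai_exaflops: float) -> float:
    """Return how many times larger the mixed-precision AI figure is
    than the peak FP64 figure, after converting both to plain FLOPS."""
    return (ai_exaflops * EXA) / (fp64_petaflops * PETA)


# Approximate figures quoted in the article for Polaris.
ratio = ai_to_fp64_ratio(fp64_petaflops=44, ai_exaflops=1.4)
print(f"AI figure is about {ratio:.1f}x the FP64 figure")
```

By this rough measure, the mixed-precision rating is about 32 times the double-precision one, which is why the "AI exaflops" label should not be read as a standard exascale rating.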