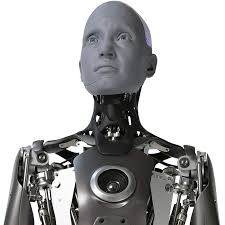

Will AI need a body to come close to human-like intelligence?

But my first disembodied AI was Joshua, the computer in WarGames who tried to start a nuclear war – until it learned about mutually assured destruction and chose to play chess instead.

Seeing this at age seven changed me. Could a machine understand ethics? Emotion? Humanity? Did artificial intelligence need a body? These fascinations deepened as portrayals of non-human intelligence grew more complex, with characters like the android Bishop in Aliens, Data in Star Trek: TNG, and, more recently, Samantha in Her and Ava in Ex Machina.

But these aren't just speculative questions anymore. Roboticists today are wrestling with whether artificial intelligence needs a body and, if so, what kind.

And then there's the "how" of it all: if embodied intelligence is the way forward to true artificial general intelligence (AGI), could soft robots be the key to that next step?

The limits of disembodied AI

Recent papers are beginning to show the cracks in today's most advanced (and notably disembodied) AI systems. A new study from Apple examined so-called "Large Reasoning Models" (LRMs) – language models that generate reasoning steps before answering. These systems, the paper notes, perform better than standard LLMs on many tasks, but fall apart when problems get too complex. Strikingly, they don't just plateau – they collapse, even when given more than enough computing power.

Worse, they fail to reason consistently or algorithmically. Their "reasoning traces" – how they work through problems – lack internal logic. And the more complex the challenge, the less effort the models seem to expend. These systems, the authors conclude, don't really "think" the way humans do.

"What we are building now are things that take in words and predict the next most likely word ... That's very different from what you and I do," Nick Frosst, a former Google researcher and co-founder of Cohere, told The New York Times.

Cognition is more than just computation

How did we get here? For much of the 20th century, artificial intelligence followed a model called GOFAI – "Good Old-Fashioned Artificial Intelligence" – which treated cognition as symbolic logic. Early AI researchers believed intelligence could be built by processing symbols, much like a computer executes code. Abstract, symbol-based thinking certainly doesn't need a body to advance.

This idea began to fray when early AI-driven robots failed to handle messy, real-world conditions. Researchers in psychology, neuroscience, and philosophy began to ask a different question, informed by studies of animal and plant intelligence: organisms that adapt, learn, and respond to complex environmental conditions. These organisms learn through physical interaction, not symbolic ideas.
