AI voice cloning from a few seconds of voice sampling is real and rapidly improving

Examples are available that pair recorded speech samples with speech synthesized from different numbers of cloning samples. The synthesized speech has some noise distortion, but it does sound like the original speakers.

Baidu attempted to learn speaker characteristics from only a few utterances (i.e., sentences of a few seconds' duration). This problem is commonly known as "voice cloning." Voice cloning is expected to have significant applications in personalizing human-machine interfaces.

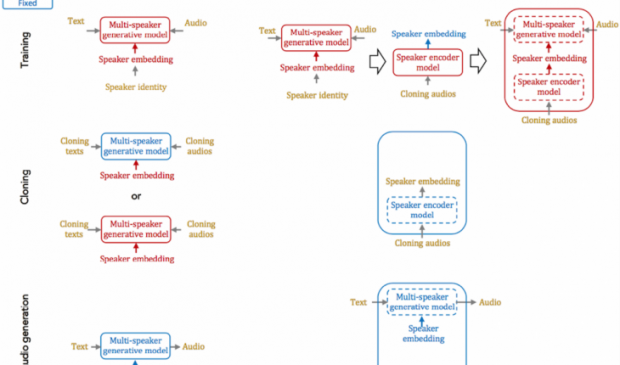

The researchers tried two fundamental approaches to voice cloning: speaker adaptation and speaker encoding.

Speaker adaptation fine-tunes a multi-speaker generative model on a few cloning samples using backpropagation-based optimization. Adaptation can be applied to the whole model or only to the low-dimensional speaker embeddings. The latter needs far fewer parameters to represent each speaker, although it yields a longer cloning time and lower audio quality.
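To make the two adaptation modes concrete, here is a minimal toy sketch in plain Python. It is not Baidu's model: the "generative model" is assumed to be a tiny linear map plus a per-speaker embedding vector, and the names `predict`, `adapt`, `trainable_parameters`, and the sample data are all hypothetical. It only illustrates the mechanics (gradient-based fine-tuning on a few cloning samples) and the difference in how many parameters each mode updates. It does not reproduce the cloning-time or audio-quality trade-off.

```python
# Toy stand-in for a multi-speaker model: output = W @ x + speaker_embedding.
# All values below are made up for illustration.
BASE_WEIGHTS = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.4]]  # shared across speakers
BASE_EMBEDDING = [0.0, 0.0]                           # starting speaker embedding
TRUE_EMBEDDING = [1.0, -0.5]                          # the "new speaker" to clone


def predict(weights, embedding, x):
    """Linear map of the input plus the speaker embedding."""
    return [sum(w * xj for w, xj in zip(row, x)) + e
            for row, e in zip(weights, embedding)]


def mse(weights, embedding, samples):
    """Mean squared error over all samples and output dimensions."""
    total, count = 0.0, 0
    for x, target in samples:
        for o, t in zip(predict(weights, embedding, x), target):
            total += (o - t) ** 2
            count += 1
    return total / count


def adapt(weights, embedding, samples, embedding_only, lr=0.05, epochs=200):
    """Fine-tune with per-sample gradient descent on 0.5 * squared error.

    embedding_only=True updates just the speaker embedding;
    embedding_only=False updates the shared weights as well.
    """
    weights = [row[:] for row in weights]  # work on copies
    embedding = embedding[:]
    for _ in range(epochs):
        for x, target in samples:
            out = predict(weights, embedding, x)
            for i, (o, t) in enumerate(zip(out, target)):
                err = o - t
                embedding[i] -= lr * err              # d(loss)/d(embedding[i])
                if not embedding_only:
                    for j, xj in enumerate(x):
                        weights[i][j] -= lr * err * xj  # d(loss)/d(W[i][j])
    return weights, embedding


def trainable_parameters(weights, embedding, embedding_only):
    """Number of values that adaptation is allowed to change."""
    n = len(embedding)
    if not embedding_only:
        n += sum(len(row) for row in weights)
    return n


# A few "cloning samples" generated from the new speaker.
INPUTS = [[1.0, 0.0, 0.5], [0.2, -1.0, 0.3], [-0.7, 0.4, 1.0]]
CLONING_SAMPLES = [(x, predict(BASE_WEIGHTS, TRUE_EMBEDDING, x)) for x in INPUTS]

if __name__ == "__main__":
    for only_emb in (True, False):
        w, e = adapt(BASE_WEIGHTS, BASE_EMBEDDING, CLONING_SAMPLES, only_emb)
        print("embedding_only =", only_emb,
              "| trainable parameters:",
              trainable_parameters(BASE_WEIGHTS, BASE_EMBEDDING, only_emb),
              "| loss after adaptation:", mse(w, e, CLONING_SAMPLES))
```

In this toy setup, embedding-only adaptation touches 2 values per speaker while whole-model adaptation touches 8. In a real multi-speaker network the gap would be far larger, which is the storage advantage the paragraph above describes.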
