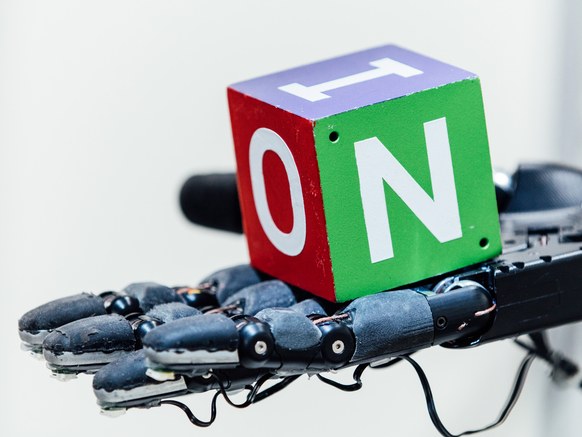

This Robot Hand Taught Itself How to Grab Stuff Like a Human

Elon Musk helped found a research nonprofit, OpenAI, to help cut a path to "safe" artificial general intelligence, as opposed to machines that pop our civilization like a pimple. Yes, Musk's very public fears may distract from other, more real problems in AI. But OpenAI just took a big step toward robots that better integrate into our world by not, well, breaking everything they pick up.

OpenAI researchers have built a system in which a simulated robotic hand learns to manipulate a block through trial and error, then seamlessly transfers that knowledge to a robotic hand in the real world. Incredibly, the system ends up "inventing" characteristic grasps that humans already commonly use to handle objects. Not, to be clear, in a quest to pop us like pimples.

The researchers' trick is a technique called reinforcement learning. In a simulation, a hand, powered by a neural network, is free to experiment with different ways to grasp and fiddle with a block. "It's just doing random things and failing miserably all the time," says OpenAI engineer Matthias Plappert. "Then what we do is we give it a reward whenever it does something that slightly moves it toward the goal it actually wants to achieve, which is rotating the block."
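The reward idea Plappert describes can be illustrated with a much smaller toy. This is a sketch under loudly stated assumptions; it is not OpenAI's system, which used a neural network controlling a simulated hand.

Here the block's orientation is reduced to one of eight discrete angles. A tabular Q-learning agent picks among three moves: turn back, hold, or turn forward. It earns a small reward for any move that brings the block closer to a target angle, plus a bonus for reaching it. The names `N_ORIENTATIONS`, `GOAL`, `step`, `train`, and `rollout`, and all the reward numbers, are hypothetical choices made for illustration.

```python
import random

# Toy stand-in for "rotating the block" (hypothetical, not OpenAI's setup):
# the block's orientation is one of N_ORIENTATIONS discrete angles.
N_ORIENTATIONS = 8            # 8 steps of 45 degrees
GOAL = 4                      # hypothetical target orientation (180 degrees)
ACTIONS = (-1, 0, 1)          # turn one step back, hold still, turn one step forward


def distance(state: int) -> int:
    """Fewest steps between `state` and GOAL, going either way around the circle."""
    d = abs(state - GOAL)
    return min(d, N_ORIENTATIONS - d)


def step(state: int, action: int) -> tuple[int, float, bool]:
    """Apply one action and return (next_state, reward, done)."""
    next_state = (state + action) % N_ORIENTATIONS
    # Shaped reward: +1 for a move toward the goal, -1 for a move away, 0 for holding.
    reward = float(distance(state) - distance(next_state))
    done = next_state == GOAL
    if done:
        reward += 10.0        # extra reward for actually reaching the goal
    return next_state, reward, done


def greedy(q: dict, state: int, rng: random.Random) -> int:
    """Pick the highest-valued action, breaking ties at random."""
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])


def train(episodes: int = 2000, alpha: float = 0.5, gamma: float = 0.9,
          epsilon: float = 0.2, max_steps: int = 50, seed: int = 0) -> dict:
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_ORIENTATIONS) for a in ACTIONS}
    starts = [s for s in range(N_ORIENTATIONS) if s != GOAL]
    for _ in range(episodes):
        state = rng.choice(starts)
        for _ in range(max_steps):
            # All values start at zero, so every action ties and early behavior is random.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = greedy(q, state, rng)
            next_state, reward, done = step(state, action)
            # Move the estimate toward reward plus discounted value of the next state.
            future = 0.0 if done else gamma * max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + future - q[(state, action)])
            state = next_state
            if done:
                break
    return q


def rollout(q: dict, start: int, limit: int = N_ORIENTATIONS) -> list[int]:
    """Follow the learned greedy policy from `start`; return the visited orientations."""
    rng = random.Random(1)
    path = [start]
    while path[-1] != GOAL and len(path) <= limit:
        next_state, _, _ = step(path[-1], greedy(q, path[-1], rng))
        path.append(next_state)
    return path


if __name__ == "__main__":
    q = train()
    print(rollout(q, start=0))
```

A lookup table stands in for the neural network only because this toy has 8 states and 3 actions. A real hand, with many joints and continuous positions, has far too many states for a table, which is why a neural network is needed there.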