Breaking News

We Americans Need to Dig Deep into Historical Perspective

A timeless clip of Michael Burry explaining how he used credit default swaps...

The next financial crisis won't start in a bank lobby. It's already brewing in the market

Top Tech News

This tiny dev board is packed with features for ambitious makers

Scientists Discover Gel to Regrow Tooth Enamel

Vitamin C and Dandelion Root Killing Cancer Cells -- as Former CDC Director Calls for COVID-19...

Galactic Brain: US firm plans space-based data centers, power grid to challenge China

A microbial cleanup for glyphosate just earned a patent. Here's why that matters

Japan Breaks Internet Speed Record with 5 Million Times Faster Data Transfer

Advanced Propulsion Resources Part 1 of 2

PulsarFusion, a forward-thinking UK aerospace company, is pushing the boundaries of space travel...

Dinky little laser box throws big-screen entertainment from inches away

'World's first' sodium-ion flashlight shines bright even at -40 °F

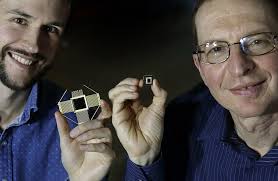

Breakthroughs in Neuromorphic Computing Could Speed Computers and AI By Ten Times

The new system uses parallel programming of an ionic floating-gate memory array, which allows large amounts of information to be processed simultaneously in a single operation. The research is inspired by the human brain, where neurons and synapses are connected in a dense matrix and information is processed and stored in the same location.
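To make the idea of processing and storing information in the same location more concrete, here is a minimal Python sketch of an idealized crossbar read. It assumes each cell's conductance stores one weight and that input voltages are applied to the rows; the function name `crossbar_read` is hypothetical, and the model ignores device noise, wire resistance and the ionic floating-gate physics, which the article does not describe at this level. The loops only simulate what the array would do in a single analog step.

```python
from typing import List


def crossbar_read(conductances: List[List[float]], voltages: List[float]) -> List[float]:
    """Idealized crossbar read (hypothetical model, not Sandia's circuit).

    Each cell contributes a current G[r][c] * V[r] (Ohm's law), and the
    currents on each column wire add up (Kirchhoff's current law), so the
    column outputs form a matrix-vector product computed where the weights
    are stored. Real hardware does this in one step; the loops simulate it.
    """
    if len(conductances) != len(voltages):
        raise ValueError("need one input voltage per row")
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for row, v in zip(conductances, voltages):
        if len(row) != n_cols:
            raise ValueError("all rows must have the same number of columns")
        for c, g in enumerate(row):
            currents[c] += g * v  # per-cell current summed onto column c
    return currents
```

For example, `crossbar_read([[1, 2], [3, 4]], [1, 1])` sums each column and returns `[4.0, 6.0]`. The weights never leave the array, which is the property the brain analogy is pointing at.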

Sandia researchers demonstrated the ability to adjust the strength of the synaptic connections in the array using parallel computing. This will allow computers to learn and process information at the point where it is sensed, rather than transferring it to the cloud for computing, greatly improving speed and efficiency while reducing power consumption.
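One common way to train a crossbar array in parallel is an outer-product update, in which signals on the rows and columns adjust every cell at once. The sketch below assumes that scheme as a stand-in; it is not necessarily the programming method Sandia used, and `parallel_update`, `rate`, `g_min` and `g_max` are hypothetical names chosen for illustration.

```python
from typing import List


def parallel_update(
    conductances: List[List[float]],
    row_signals: List[float],
    col_signals: List[float],
    rate: float,
    g_min: float = 0.0,
    g_max: float = 1.0,
) -> List[List[float]]:
    """Hypothetical outer-product update for a crossbar of synaptic weights.

    Every cell (r, c) moves by rate * row_signals[r] * col_signals[c] and is
    clipped to the assumed device range [g_min, g_max]. In hardware all cells
    would change in the same step; here a comprehension computes the same
    result cell by cell and returns a new matrix.
    """
    if len(conductances) != len(row_signals):
        raise ValueError("need one row signal per row")
    if any(len(row) != len(col_signals) for row in conductances):
        raise ValueError("need one column signal per column")
    return [
        [min(g_max, max(g_min, g + rate * x * d)) for g, d in zip(row, col_signals)]
        for row, x in zip(conductances, row_signals)
    ]
```

Because each cell's change depends only on its own row and column signals, the whole weight matrix can be updated without reading the weights out to a separate processor, which is where the speed and power savings come from in this simplified picture.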

Through machine learning, mainstream digital applications can now recognize and understand complex patterns in data. For example, popular virtual assistants, such as Amazon.com Inc.'s Alexa or Apple Inc.'s Siri, sort through large streams of data to understand voice commands and improve over time.

With the dramatic expansion of machine learning algorithms in recent years, applications now demand ever larger amounts of data storage and power to complete these difficult tasks. Traditional digital computing architecture is not designed or optimized for artificial neural networks, which are an essential part of machine learning.