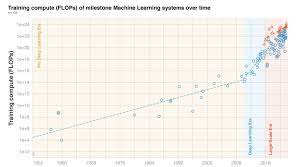

Three Eras of Machine Learning and Predicting the Future of AI

They show:

Before 2010, training compute grew in line with Moore's law, doubling roughly every 20 months.

Since the advent of Deep Learning in the early 2010s, the scaling of training compute has accelerated, doubling approximately every 6 months.

In late 2015, a new trend emerged as firms developed large-scale ML models with 10- to 100-fold larger requirements in training compute.

Based on these observations, they split the history of compute in ML into three eras: the Pre Deep Learning Era, the Deep Learning Era, and the Large-Scale Era. Overall, the work highlights the fast-growing compute requirements for training advanced ML systems.
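To make the difference between the two doubling times concrete, here is a minimal Python sketch of the arithmetic. The 20-month and 6-month figures come from the text; the `growth_factor` helper and the 10-year horizon are illustrative assumptions, not anything taken from the study itself.

```python
def growth_factor(doubling_months: float, elapsed_months: float) -> float:
    """Multiplicative growth after elapsed_months, given a fixed doubling time."""
    return 2.0 ** (elapsed_months / doubling_months)


# Doubling times quoted in the text.
MOORE_DOUBLING_MONTHS = 20          # before 2010
DEEP_LEARNING_DOUBLING_MONTHS = 6   # early 2010s onward

# Growth over one year under each trend.
per_year_moore = growth_factor(MOORE_DOUBLING_MONTHS, 12)
per_year_dl = growth_factor(DEEP_LEARNING_DOUBLING_MONTHS, 12)

# Growth over an assumed 10-year horizon (120 months), for illustration only.
decade_moore = growth_factor(MOORE_DOUBLING_MONTHS, 120)
decade_dl = growth_factor(DEEP_LEARNING_DOUBLING_MONTHS, 120)

print(f"Per year:  {per_year_moore:.2f}x vs {per_year_dl:.0f}x")
print(f"Per decade: {decade_moore:.0f}x vs {decade_dl:,.0f}x")
```

Under these assumptions, a 20-month doubling time gives about 1.5x growth per year and 64x over a decade, while a 6-month doubling time gives 4x per year and roughly a million-fold over a decade.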

They present a detailed investigation into the compute demand of milestone ML models over time and make the following contributions:

1. They curate a dataset of 123 milestone Machine Learning systems, annotated with the compute it took to train them.