Breaking News

Episode 403: THE POLITICS OF POLIO

Episode 403: THE POLITICS OF POLIO

Google Versus xAI AI Compute Scaling

Google Versus xAI AI Compute Scaling

OpenAI Releases O3 Model With High Performance and High Cost

OpenAI Releases O3 Model With High Performance and High Cost

WE FOUND OUT WHAT THE DRONES ARE!! ft. Dr. Steven Greer

WE FOUND OUT WHAT THE DRONES ARE!! ft. Dr. Steven Greer

Top Tech News

"I am Exposing the Whole Damn Thing!" (MIND BLOWING!!!!) | Randall Carlson

"I am Exposing the Whole Damn Thing!" (MIND BLOWING!!!!) | Randall Carlson

Researchers reveal how humans could regenerate lost body parts

Researchers reveal how humans could regenerate lost body parts

Antimatter Propulsion Is Still Far Away, But It Could Change Everything

Antimatter Propulsion Is Still Far Away, But It Could Change Everything

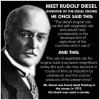

Meet Rudolph Diesel, inventor of the diesel engine

Meet Rudolph Diesel, inventor of the diesel engine

China Looks To Build The Largest Human-Made Object In Space

China Looks To Build The Largest Human-Made Object In Space

Ferries, Planes Line up to Purchase 'Solar Diesel' a Cutting-Edge Low-Carbon Fuel...

Ferries, Planes Line up to Purchase 'Solar Diesel' a Cutting-Edge Low-Carbon Fuel...

"UK scientists have created an everlasting battery in a diamond

"UK scientists have created an everlasting battery in a diamond

First look at jet-powered VTOL X-plane for DARPA program

First look at jet-powered VTOL X-plane for DARPA program

Billions of People Could Benefit from This Breakthrough in Desalination That Ensures...

Billions of People Could Benefit from This Breakthrough in Desalination That Ensures...

Tiny Wankel engine packs a power punch above its weight class

Tiny Wankel engine packs a power punch above its weight class

Google Versus xAI AI Compute Scaling

xAI has already trained Grok 3 with 100,000 Nvidia H100s but has not yet released it. xAI has added another 100,000 chips and will train Grok 4 with 200,000 Nvidia H100s and H200s. Grok 4 will be released in April 2025. Google and xAI are the leaders in AI compute, each with over 100,000 GPUs or TPUs used for model training. xAI is scaling to a million GPUs by the end of 2025. Google already has that many TPUs, but they may not be integrated in one building or one coherent memory.

Google Gemini 2.0 was trained with Trillium, Google's sixth-generation Tensor Processing Unit (TPU). This custom AI accelerator is now generally available to cloud customers, showcasing Google's commitment to building an extensive computational infrastructure. Over 100,000 Trillium chips have been deployed in a single network fabric, enabling massive-scale AI operations.

Google has millions of TPU chips spread across multiple buildings and facilities. Large-scale AI training requires all of the chips to be on one network and to share one memory. It remains to be seen how Google will integrate its many TPU chips into one system for large AI model training.

Nvidia H100 clusters had trouble scaling beyond 30,000 coherent chips for AI training. Google's TPUs are different chips with different networking capabilities.

Google is keeping pace with xAI in scaling its AI training cluster to roughly 100,000 Nvidia H100-class chips.

xAI is training Grok 3 with 100,000 Nvidia H100s (release expected in January or February) and will train Grok 4 with 200,000 Nvidia H100s (release expected in April or May).

xAI is training Grok 5 with 100,000 to 200,000 Nvidia B200s (release expected around August).

One Google AI training campus already has a power capacity close to 300 MW (2024) and will ramp up to 500 MW in 2025. Across its fleet, Google has already deployed millions of liquid-cooled TPUs, accounting for more than one gigawatt (GW) of liquid-cooled AI chip capacity.

In 2025, Google will be able to conduct gigawatt-scale training runs across multiple campuses, but Google's long-term plans are not nearly as aggressive as those of xAI, OpenAI and Microsoft.
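To put the chip counts and megawatt figures above side by side, here is a rough back-of-envelope sketch relating accelerator count to facility power. It assumes 700 W per chip (the rated TDP of an Nvidia H100 SXM) and a hypothetical overhead factor of 2.0 to cover host servers, networking, storage and cooling. Neither the overhead factor nor the helper names `cluster_power_mw` and `chips_supported` come from the article, and real per-chip figures for TPUs, H200s or B200s differ.

```python
# Back-of-envelope estimate relating accelerator count to facility power.
# ASSUMPTIONS (illustrative, not from the article):
#   - watts_per_chip defaults to 700 W, the rated TDP of an Nvidia H100 SXM
#   - overhead_factor is a hypothetical multiplier covering host CPUs,
#     networking, storage and cooling on top of the chips themselves


def cluster_power_mw(num_chips: int, watts_per_chip: float = 700.0,
                     overhead_factor: float = 2.0) -> float:
    """Return the estimated facility power, in megawatts, for num_chips."""
    if num_chips < 0 or watts_per_chip < 0 or overhead_factor < 1.0:
        raise ValueError("inputs must be non-negative and overhead_factor >= 1")
    return num_chips * watts_per_chip * overhead_factor / 1e6


def chips_supported(power_mw: float, watts_per_chip: float = 700.0,
                    overhead_factor: float = 2.0) -> int:
    """Return how many chips a power budget (MW) could feed under the same assumptions."""
    if power_mw < 0 or watts_per_chip <= 0 or overhead_factor < 1.0:
        raise ValueError("power must be non-negative, watts positive, overhead_factor >= 1")
    # Floor division: only whole chips can be powered.
    return int(power_mw * 1e6 // (watts_per_chip * overhead_factor))


if __name__ == "__main__":
    # Cluster sizes mentioned in the article.
    for n in (100_000, 200_000, 1_000_000):
        print(f"{n:>9,} chips -> ~{cluster_power_mw(n):,.0f} MW")
    # Power budgets mentioned in the article.
    for mw in (300, 500, 1000):
        print(f"{mw:>5} MW -> ~{chips_supported(mw):,} chips")
```

Under these assumptions, 100,000 H100-class chips need on the order of 140 MW, and a 1 GW budget would feed roughly 714,000 such chips. Changing either assumption moves the answer proportionally, so the sketch is only useful for order-of-magnitude comparisons.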