Breaking News

Democrats Announce State Of The Union Response Will Be Delivered By Bad Bunny

American Attack on Iran: World War

If You Think the US Wants To Bring Democracy to Iran, Watch What They're Currently Doing to Iraq

The Scary Truth About Living in Big Cities During the Turbulent Times Ahead

Top Tech News

New Spray-on Powder Instantly Seals Life-Threatening Wounds in Battle or During Disasters

AI-enhanced stethoscope excels at listening to our hearts

Flame-treated sunscreen keeps the zinc but cuts the smeary white look

Display hub adds three more screens powered through single USB port

We Finally Know How Fast The Tesla Semi Will Charge: Very, Very Fast

Drone-launching underwater drone hitches a ride on ship and sub hulls

Humanoid Robots Get "Brains" As Dual-Use Fears Mount

SpaceX Authorized to Increase High Speed Internet Download Speeds 5X Through 2026

Space AI is the Key to the Technological Singularity

Velocitor X-1 eVTOL could be beating the traffic in just a year

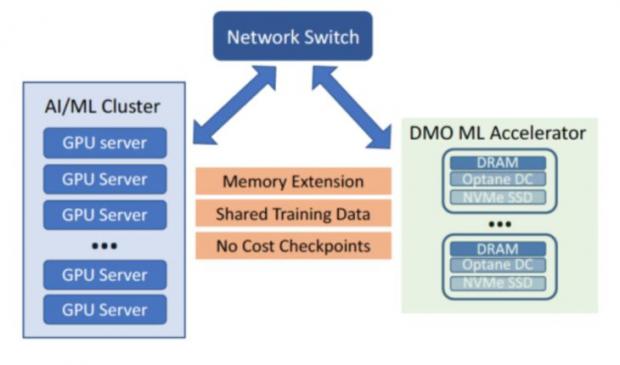

Beyond Big Data is Big Memory Computing for 100X Speed

This new category is sparking a revolution in data center architecture where all applications will run in memory. Until now, in-memory computing has been restricted to a select range of workloads due to the limited capacity and volatility of DRAM and the lack of software for high availability. Big Memory Computing is the combination of DRAM, persistent memory and Memory Machine software technologies, where the memory is abundant, persistent and highly available.

Transparent Memory Service

Scale-out to Big Memory configurations.

100x more capacity than current memory.

No application changes.

Big Memory Machine Learning and AI

* Model and feature libraries today are often split between DRAM and SSD because DRAM capacity is insufficient, which slows performance.

* MemVerge Memory Machine pools the DRAM and PMEM capacity of the cluster, allowing the model and feature libraries to reside entirely in memory.

* Transactions per second (TPS) can be increased 4X, while inference latency can be improved 100X.
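
To see why moving the SSD-resident part of a model into memory matters so much for latency, here is a rough back-of-the-envelope sketch. It models the average lookup latency as a weighted mix of a fast tier and a slow tier. The function name `average_lookup_latency_us` is hypothetical, and the latency figures are round illustrative assumptions, not MemVerge measurements or benchmarks.

```python
def average_lookup_latency_us(dram_fraction, dram_latency_us, slow_latency_us):
    """Expected latency of one lookup when only part of the data sits in DRAM.

    dram_fraction   -- share of lookups served from DRAM (0.0 to 1.0)
    dram_latency_us -- assumed DRAM access latency in microseconds
    slow_latency_us -- assumed latency of the slower tier in microseconds
    """
    if not 0.0 <= dram_fraction <= 1.0:
        raise ValueError("dram_fraction must be between 0 and 1")
    return dram_fraction * dram_latency_us + (1.0 - dram_fraction) * slow_latency_us


# Assumed, order-of-magnitude latencies for illustration only.
DRAM_US = 0.1    # DRAM access
PMEM_US = 0.3    # persistent memory access
SSD_US = 100.0   # SSD read

# Half of the model and feature data spills out of DRAM in both cases.
split_dram_ssd = average_lookup_latency_us(0.5, DRAM_US, SSD_US)
all_in_memory = average_lookup_latency_us(0.5, DRAM_US, PMEM_US)

print(f"DRAM + SSD : {split_dram_ssd:.2f} us per lookup")
print(f"DRAM + PMEM: {all_in_memory:.2f} us per lookup")
print(f"Speedup    : {split_dram_ssd / all_in_memory:.0f}x")
```

Under these assumptions the slow tier dominates the average, so replacing SSD with PMEM for the spilled half shrinks latency by orders of magnitude. Real gains depend on the workload, the access pattern, and the actual hardware.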