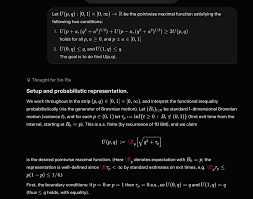

xAI Grok 4.20 and OpenAI GPT-5.2 Are Solving Significant, Previously Unsolved Math Problems

The Grok 4.20 result shows AI quickly generating a novel mathematical object, a Bellman function for an optimal-control problem, and linking it to isoperimetric profiles and the Takagi function (RH-related). It advances the understanding of square function instabilities without changing the world immediately, but it highlights AI's role in taking "small steps" toward deeper insights in stochastic processes and analysis.
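For readers unfamiliar with the Takagi function (also called the blancmange curve): it is the continuous, nowhere-differentiable function T(x) = Σ s(2ⁿx) / 2ⁿ summed over n ≥ 0, where s(y) is the distance from y to the nearest integer. The minimal sketch below only evaluates a truncated version of that series to illustrate the definition; it is not the Grok result or its Bellman-function construction. The function name `takagi` and the 50-term cutoff are arbitrary choices made for this illustration.

```python
def takagi(x: float, terms: int = 50) -> float:
    """Truncated Takagi (blancmange) function.

    Sums the first `terms` terms of T(x) = sum over n >= 0 of s(2**n * x) / 2**n,
    where s(y) is the distance from y to the nearest integer.
    """
    total = 0.0
    for n in range(terms):
        y = (2 ** n) * x
        s = abs(y - round(y))  # distance from y to the nearest integer
        total += s / (2 ** n)
    return total


if __name__ == "__main__":
    print(takagi(0.25))   # → 0.5 (for dyadic x, all later terms are zero)
    print(takagi(1 / 3))  # close to 2/3, the maximum value of T
```

Because every term is at most 1/2ⁿ⁺¹, truncating after 50 terms changes the result by less than 2⁻⁵⁰, which is below double-precision resolution for values of this size.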

OpenAI's GPT-5.2 has also solved significant math problems that had been open for decades.

Paul Erdős, the prolific Hungarian mathematician, posed over 1,000 open problems across fields such as combinatorics, number theory, and graph theory. Many remain unsolved, and some carry cash prizes. Recently, large language models (LLMs) such as OpenAI's GPT-5.2 (released in late 2025) have made headlines for contributing to solutions, often autonomously or with minimal human guidance. These results are tracked on erdosproblems.com, a site maintained by mathematician Thomas Bloom, and verified by experts such as Fields Medalist Terence Tao.

Since Christmas 2025, 15 Erdős problems have been marked solved, 11 of them crediting AI involvement. Tao's analysis counts 8 cases of meaningful autonomous AI progress and 6 where AI built on prior research.

AI doesn't just generate initial proofs; it also excels at iteratively refining them. For instance, when turning raw AI-generated arguments into full research papers, tools like GPT can:

– Automatically rewrite sections for better clarity.

– Adjust phrasing, variable choices, or logical flow.

– Incorporate historical context, literature references, and natural-language explanations.

– Produce multiple drafts quickly, moving the text from the "feel of a generic AI-produced document" to something approaching acceptable research-paper quality.

In one example Tao cited (the autonomous solution of Erdős #728), this collaboration produced a new write-up of the proof that he judged to be within the ballpark of an acceptable standard for a research paper: there was still room for improvement, but it was far beyond the initial raw output.

Tao describes this as turning proof-writing into a search problem at scale. AI can generate thousands of mini-lemmas, variations, or exposition styles, then use checkers such as the Lean proof assistant to validate them and cull the weak ones, while humans focus on high-level direction. He calls this "vibe-coding," or rapid iteration, an approach that complements human strengths. This kind of "vibe-math" proofing is genuinely useful: it lets more possibilities be considered more rapidly, so a good human mathematician's research can be enhanced and sped up.
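The generate-then-cull loop Tao describes can be illustrated with a toy Python sketch. It is not how any of these AI systems actually work. It assumes a hard-coded set of candidate closed forms for the sum 1 + 3 + ... + (2n - 1), standing in for model output, and a brute-force "checker" that tests each candidate for n = 1 to 200, standing in for a proof assistant. Passing finitely many cases is only evidence, whereas Lean would check a proof valid for every n. The names `CANDIDATES`, `target`, `survives`, and `cull` are hypothetical.

```python
from typing import Callable

# Hypothetical candidate "lemmas": proposed closed forms for 1 + 3 + ... + (2n - 1).
# In the workflow described above these would come from a model; here they are hard-coded.
CANDIDATES: dict[str, Callable[[int], int]] = {
    "n^2": lambda n: n * n,
    "n^2 + 1": lambda n: n * n + 1,
    "n(n+1)/2": lambda n: n * (n + 1) // 2,
    "2n - 1": lambda n: 2 * n - 1,
}


def target(n: int) -> int:
    """Ground truth computed directly: the sum of the first n odd numbers."""
    return sum(2 * k - 1 for k in range(1, n + 1))


def survives(candidate: Callable[[int], int], limit: int = 200) -> bool:
    """Toy checker: compare the candidate with the target for n = 1..limit.

    Unlike a Lean proof, agreement on finitely many cases is evidence, not a proof.
    """
    return all(candidate(n) == target(n) for n in range(1, limit + 1))


def cull(candidates: dict[str, Callable[[int], int]]) -> list[str]:
    """Keep only the names of candidates that the checker accepts."""
    return [name for name, f in candidates.items() if survives(f)]


if __name__ == "__main__":
    print(cull(CANDIDATES))  # → ['n^2']
```

Replacing `survives` with a call into a real proof checker is where the actual difficulty lies; the generate, check, and discard structure stays the same.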